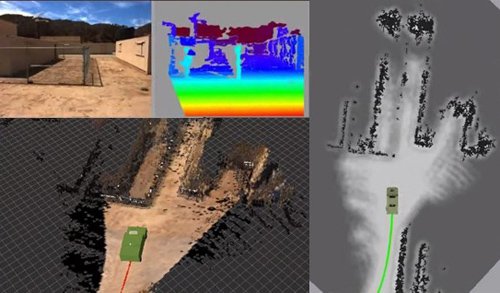

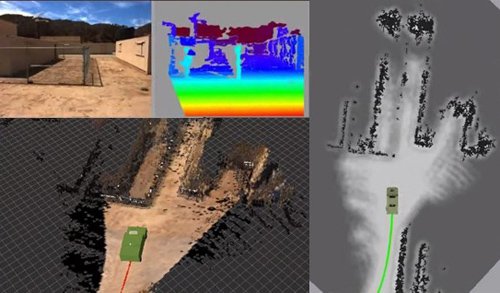

Graphical representation of fused sensory data obtained from the HMMWV test platform at Camp Pendleton.

JPL Robotics is funded by the Office of Naval Research (ONR) to help improve logistics support for soldiers in the field by replacing manned resupply missions with autonomous unmanned ground vehicles (UGVs). In particular, ONR is seeking a low-cost perception system that can transform a small, jeep-sized vehicle into an autonomous Logistics Connector UGV. Many autonomous UGV perception systems have been developed without significant attention to production costs. The challenge undertaken in this effort is to use multiple complementary low-cost sensors to achieve autonomous navigation performance similar to that of a high-cost lidar.

Toward this objective, JPL Robotics has performed a trade study of state-of-the-art low-cost sensors, built and delivered a low-cost perception system, and developed perception algorithms for daytime stereo vision, multi-modal sensor processing, positive obstacle detection, ground segmentation, and supervised daytime material classification. Recently, the first version of the low-cost perception system was field tested at Camp Pendleton against the baseline perception system using an autonomous high-mobility multi-wheeled vehicle (HMMWV). Thus far, the daytime stereo vision, multi-modal sensor processing, positive obstacle detection, and ground segmentation capabilities have been accepted into the baseline and will soon undergo verification and validation testing.

Based on performance gaps identified in the first-version perception system, a follow-up sensor trade study was performed, and a second-generation low-cost perception system has been designed. As part of this second-generation development effort, JPL Robotics will:

1. build and deliver a second-generation low-cost perception system

2. develop side slope detection, object segmentation, and dynamic object tracking perception algorithms

3. develop features for traversability analysis, such as surface normals to differentiate rough terrain from smooth terrain, and terrain porosity detection to differentiate terrain that can be pushed through (e.g., tall grass) from terrain that needs to be avoided

4. develop an unsupervised daytime material classification capability for key terrain types

These capabilities will be tested during autonomous navigation on off-road terrain at Camp Pendleton.
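
As an illustration of how a surface-normal feature (item 3 above) could separate rough terrain from smooth terrain, the following is a minimal sketch and not the JPL implementation. It assumes a small patch of 3D points, for example from stereo range data, fits a plane to the patch by singular value decomposition, and uses the RMS distance of the points from that plane as a roughness measure. The function names `patch_normal` and `is_rough` and the 0.05 m threshold are hypothetical choices made only for this example.

```python
import numpy as np


def patch_normal(points):
    """Fit a plane to an (N, 3) patch of 3D points.

    Returns the unit plane normal (oriented so z >= 0) and the RMS
    distance of the points from the fitted plane.
    """
    points = np.asarray(points, dtype=float)
    centered = points - points.mean(axis=0)
    # The right-singular vector with the smallest singular value is the
    # direction of least variance, i.e. the normal of the best-fit plane.
    _, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
    normal = vt[-1]
    if normal[2] < 0:
        normal = -normal
    # The smallest singular value squared equals the sum of squared
    # point-to-plane distances, so dividing by sqrt(N) gives the RMS residual.
    rms_residual = singular_values[-1] / np.sqrt(len(points))
    return normal, rms_residual


def is_rough(points, threshold_m=0.05):
    """Label a patch as rough when its plane-fit residual exceeds a threshold.

    The 0.05 m default is an illustrative assumption, not a tuned value.
    """
    _, rms_residual = patch_normal(points)
    return bool(rms_residual > threshold_m)
```

A patch of points lying on a flat surface yields a residual near zero and is labeled smooth, while a patch whose heights vary by tens of centimeters is labeled rough. In practice the threshold would depend on sensor noise and on what the vehicle can traverse.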